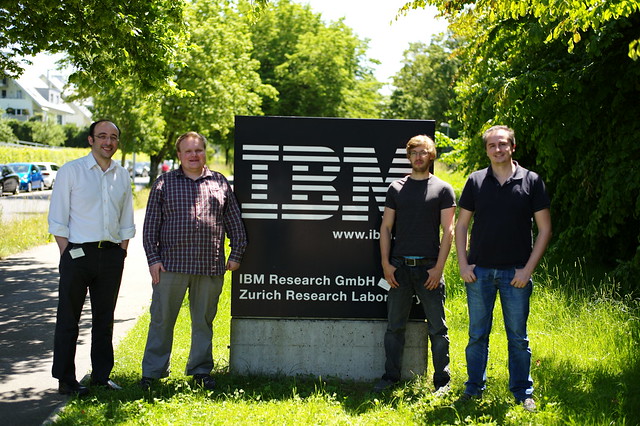

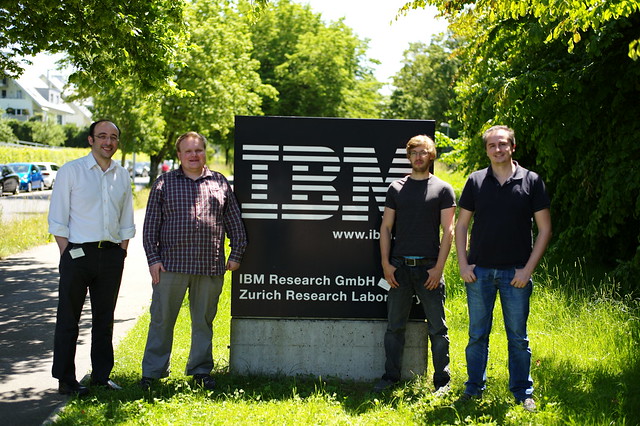

From left to right: HPC scientists Costas Bekas, IBM; Pavel Klavik, IBM and Charles University in Prague; Yves Ineichen, IBM; and Cristiano Malossi, IBM.

Labels: bluegene, HPC, IBM Research - Zurich, supercomputer